In 2017 I saw Lara Fotogenerator on a blog. The program let people submit drawn pictures to a trained neural network and receive a modified image in return. The images output by the program were funny or scary, and always unexpected. Immediately, I saw potential for collaborating with the machine to derive new images by training the program myself. (See below for the process.)

The animations in This Is How We Walk on the Moon were made this way. The parameters for machine learning are set within an AI software program. Because I don’t code, I collaborated with a web app developer to access the tool and build my own system. This web app developer became a link in the communication chain between me and the machine. He helped me befriend the neural network tool to generate individual frames, which I sequenced as a motion picture.

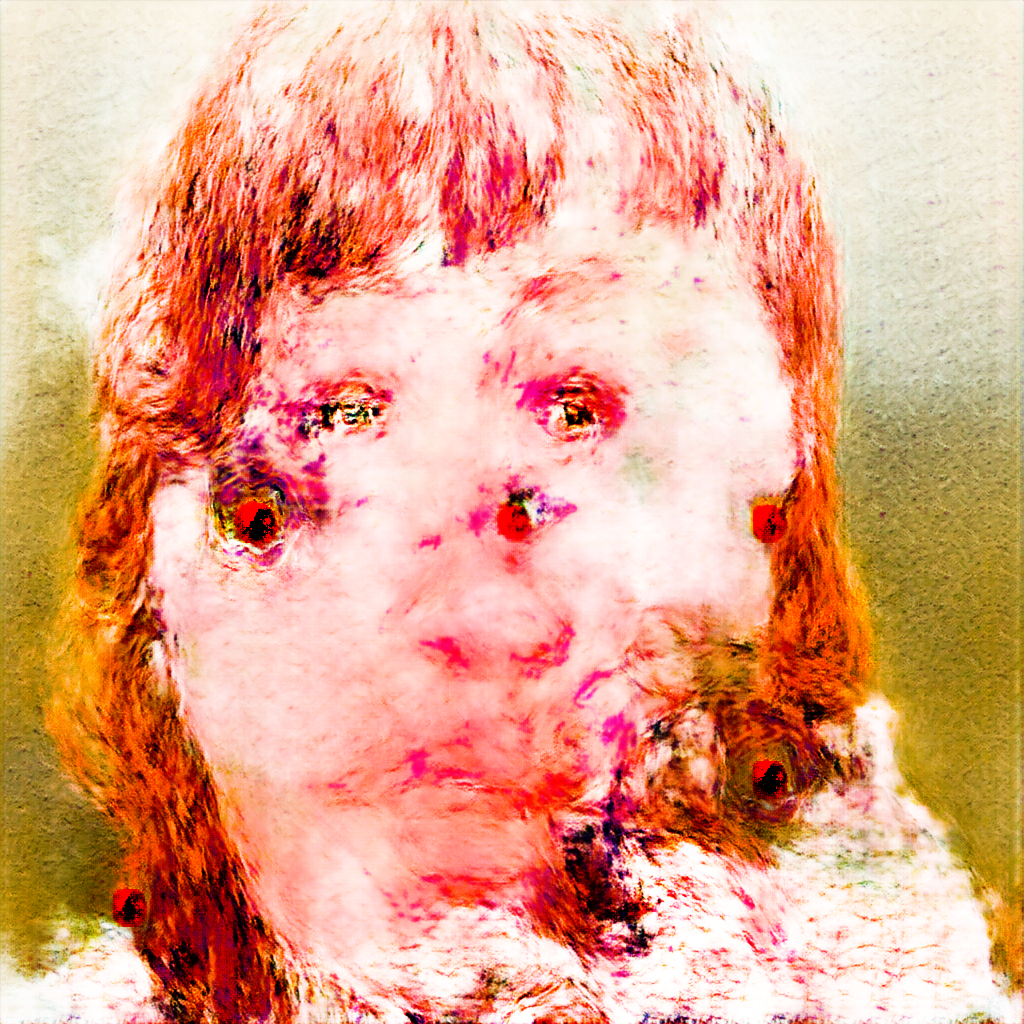

The machine learning tool processes my drawings through pictures of myself, my sister and my nieces, in just the same way that each of my experiences, my total vantage point, filters through the sisterly origination of my identity. Similarly, in the resulting images, the distinction between my hand and the digital synthesis is difficult to define. In some ways they look like photos. In other ways they look like drawings. Ultimately, they are a fusion of the two.

[Zorge, Tinka, “Help Us Test Artificial Intelligence!” For an experiment inspired by the work of artist Christopher Hesse, Dutch media outlet De Kennis Van Nu used a neural net tool called Pix2Pix to create images of one of their news anchors, Lara.]

Drawing + Machine Learning Model Training

Components

Neural network training program: pix2pix

System for running machine learning programs like pix2pix: TensorFlow

Coding language: Python, a scripting language

Software developer collaborator: Andy Kelleher Stuhl

Pix2pix

Working with pix2pix happens in two stages: first it trains a neural network (image-based artificial intelligence), then the trained network is used to generate new images.

Stage 1:

These two images (photo and its tracing) are input as one file into pix2pix.

50+ files of photos from the same shoot and their corresponding drawings are also input.

Through its internal figuring process, pix2pix learns a color mapping from each drawing to its photo.
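To make "input as one file" concrete, here is a minimal sketch of joining a photo and its tracing into a single side-by-side image. It is an illustration only, not the code we ran: the images are tiny lists of color values rather than real files, the function name `pair_side_by_side` is hypothetical, and placing the photo on the left is an assumption.

```python
def pair_side_by_side(photo, drawing):
    """Join two equally sized images into one image twice as wide.

    Each image is a list of rows; each row is a list of (r, g, b) tuples.
    """
    if len(photo) != len(drawing):
        raise ValueError("photo and drawing must have the same height")
    paired = []
    for photo_row, drawing_row in zip(photo, drawing):
        if len(photo_row) != len(drawing_row):
            raise ValueError("photo and drawing must have the same width")
        # Assumption: photo on the left, drawing on the right.
        paired.append(list(photo_row) + list(drawing_row))
    return paired


# Tiny 2x2 stand-ins for a photo and its tracing (made-up values).
photo = [[(200, 150, 120), (190, 140, 110)],
         [(180, 130, 100), (170, 120, 90)]]
drawing = [[(255, 255, 255), (0, 0, 0)],
           [(0, 0, 0), (255, 255, 255)]]

paired = pair_side_by_side(photo, drawing)
print(len(paired), "rows,", len(paired[0]), "pixels per row")
```

Each of the 50+ training files would be one such pair, so the program always sees a drawing together with the photo it was traced from.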

Process: Pix2pix uses a Generator component to create an image based on each drawing. A Discriminator component compares the Generator’s image to its corresponding photo, and communicates to the Generator what must be revised for them to match. The Generator then creates a new image, which the Discriminator again analyzes and reports back on to the Generator. This guess-and-check method repeats for a number of iterations defined in the code. We used 250. The resulting image, therefore, is as close to the original photo as the Generator and Discriminator could make it. That knowledge is then locked in as the trained neural network.
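The guess-and-check rhythm can be illustrated with a deliberately tiny stand-in. This is not pix2pix and not a real neural network: the "Generator" here is a single color guess for one pixel, and the "Discriminator" is replaced by a plain measurement of how far off the guess is, where pix2pix uses a second network that is itself trained. The names, the starting gray, and the `STEP` value are hypothetical; only the count of 250 comes from our process.

```python
ITERATIONS = 250  # the count named in the text
STEP = 0.05       # hypothetical: how strongly each round of feedback is applied


def feedback(guess, target):
    """Stand-in for the Discriminator: report how far off each channel is."""
    return [t - g for g, t in zip(guess, target)]


def refine(guess, target, iterations=ITERATIONS, step=STEP):
    """Stand-in for the Generator: revise the guess after every report."""
    guess = list(guess)
    for _ in range(iterations):
        report = feedback(guess, target)
        guess = [g + step * r for g, r in zip(guess, report)]
    return guess


photo_pixel = [200.0, 150.0, 120.0]  # made-up target color from a "photo"
first_guess = [128.0, 128.0, 128.0]  # the guess starts as plain gray

result = refine(first_guess, photo_pixel)
print([round(channel) for channel in result])
```

Each pass moves the guess a small step toward the photo, which is why the number of iterations matters: too few and the result is still mostly gray.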

Stage 2:

A new drawing is input into the trained neural network (this is an animation frame).

Pix2pix assigns color information from what it learned in Stage 1 and outputs a .png file.

This final file is sequenced with the set of animation frames to create a moving image.
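Sequencing depends on keeping the output files in frame order. The sketch below shows one way to do that, assuming the frames are named with a number (for example `frame_12.png`) and played at 12 frames per second. Both the naming pattern and the frame rate are assumptions for illustration, not details of our project.

```python
import re


def frame_number(filename):
    """Pull the first integer out of a name like 'frame_12.png'."""
    match = re.search(r"(\d+)", filename)
    if match is None:
        raise ValueError(f"no frame number in {filename!r}")
    return int(match.group(1))


def order_frames(filenames):
    """Sort by frame number, so frame_2 comes before frame_10."""
    return sorted(filenames, key=frame_number)


def frame_times(filenames, fps=12):
    """Pair each frame with the second at which it appears on screen."""
    ordered = order_frames(filenames)
    return [(name, index / fps) for index, name in enumerate(ordered)]


# Hypothetical output files, listed out of order on purpose.
outputs = ["frame_10.png", "frame_2.png", "frame_1.png"]
for name, seconds in frame_times(outputs):
    print(f"{seconds:.3f}s  {name}")
```

Sorting by the number rather than by the raw filename matters because a plain alphabetical sort would place `frame_10.png` before `frame_2.png`.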